

{{DISPLAYTITLE:Sustainable, Accurate, Fair and Explainable Machine Learning Models}}{{#seo:|title=Sustainable, Accurate, Fair and Explainable Machine Learning Models - Top Italian Scientists Journal|description=Machine learning models are currently favouring Artificial Intelligence applications in several fields, such as for instance, in finance|keywords=Artificial Intelligence, Lorenz Zonoids, S.A.F.E. requirement|citation_author=Paolo Giudici; Emanuela Raffinetti|citation_journal_title=Top Italian Scientists Journal|citation_publication_date=2023/04/11|citation_title=Sustainable, Accurate, Fair and Explainable Machine Learning Models|citation_keywords=Artificial Intelligence; Lorenz Zonoids; S.A.F.E. requirement|citation_publisher=Top Italian Scientists|citation_doi=""|}}

<b>Paolo Giudici</b><sup>(a)</sup> and <b>Emanuela Raffinetti</b><sup>(b)</sup>

<sup>(a)</sup> Department of Economics and Management, University of Pavia, Via San Felice al Monastero, 27100 Pavia, Italy; paolo.giudici@unipv.it

<sup>(b)</sup> Department of Economics and Management, University of Pavia, Via San Felice al Monastero, 27100 Pavia, Italy; emanuela.raffinetti@unipv.it

{| class="wikitable" style="float:right ;border:solid 1px #a2a9b1; background-color: #f8f9fa" cellpadding="5"

|+ <span style="font-size:18px;"></span>

|-

| style="text-align: center;" | [https://files.topitalianscientists.org/wiki/Sustainable_Accurate_Fair_Explainable_Machine_Learning_Models_Giudici_Raffinetti.pdf '''Download PDF''']

|-

| '''Published'''

|-

| April 11, 2023

|-

| '''Title'''

|-

| style="width:200px;" | Sustainable, Accurate, Fair and Explainable Machine Learning Models

|-

| '''Authors'''

|-

| Paolo Giudici<sup>(a)</sup> and Emanuela Raffinetti<sup>(b)</sup>

|}

== Abstract ==

Machine learning models are currently favouring Artificial Intelligence applications in several fields, for instance in finance. Machine learning models achieve high predictive accuracy, but at the expense of interpretability. The loss of explainability is a crucial issue, especially in regulated industries, as authorities may not validate Artificial Intelligence methods if they are unable to monitor and limit the risks deriving from them. For this reason, and according to the proposed regulations, high-risk Artificial Intelligence applications based on machine learning must be “trustworthy” and fulfill a set of basic requirements. In this paper, we propose a methodology based on Lorenz Zonoids to assess whether a machine learning model is S.A.F.E.: Sustainable, Accurate, Fair and Explainable.

== Introduction ==

Data-driven Artificial Intelligence (AI), boosted by the availability of Machine Learning (ML) models, is rapidly expanding and changing financial services. ML models typically achieve high accuracy at the expense of explainability<ref>Bussmann, N., Giudici, P., Marinelli, D., Papenbrock, J.: [https://www.doi.org/10.1007/s10614-020-10042-0 Explainable Machine Learning in Credit Risk Management]. ''Comput. Econ.'' 57, 203–216 (2020). doi: 10.1007/s10614-020-10042-0</ref><ref>Bracke, P., Datta, A., Jung, C., Sen, S.: [https://www.bankofengland.co.uk/working-paper/2019/machine-learning-explainability-in-finance-an-application-to-default-risk-analysis Machine learning explainability in finance: an application to default risk analysis]. ''Bank of England Staff Working Paper'' (2019)</ref>. Moreover, according to the proposed regulations, high-risk AI applications based on machine learning must be “trustworthy” and comply with a set of further requirements, such as Sustainability and Fairness.

To date, there are no standardised metrics that can ensure an overall assessment of the trustworthiness of AI applications in finance. To fill this gap, we propose a set of integrated statistical methods, based on the Lorenz Zonoid (the multidimensional extension of the Gini coefficient), that can be used to assess, and monitor over time, whether an AI application is trustworthy. Specifically, the methods measure Sustainability (in terms of robustness with respect to anomalous data), Accuracy (in terms of predictive accuracy), Fairness (in terms of prediction bias across different population groups) and Explainability (in terms of human understanding and oversight). We apply our proposal to an openly downloadable dataset of financial prices, so that our results are easily reproducible.

== Methodology == | |||

Lorenz Zonoids were originally proposed by<ref>Koshevoy, G., Mosler, K.: [https://www.doi.org/10.2307/2291682 The Lorenz Zonoid of a Multivariate Distribution]. ''J. Am. Stat. Assoc.'' 91, 873–882 (1996). doi: 10.2307/2291682</ref> as a generalisation of the Lorenz curve in a multidimensional setting. When referred to the one-dimensional case, the Lorenz Zonoid coincides with the Gini coefficient, a measure typically used for representing the income inequality or the wealth inequality | |||

[[File:Sustainable Accurate Fair Explainable Machine Learning Models Giudici Raffinetti Fig1.jpg|centre]] | |||

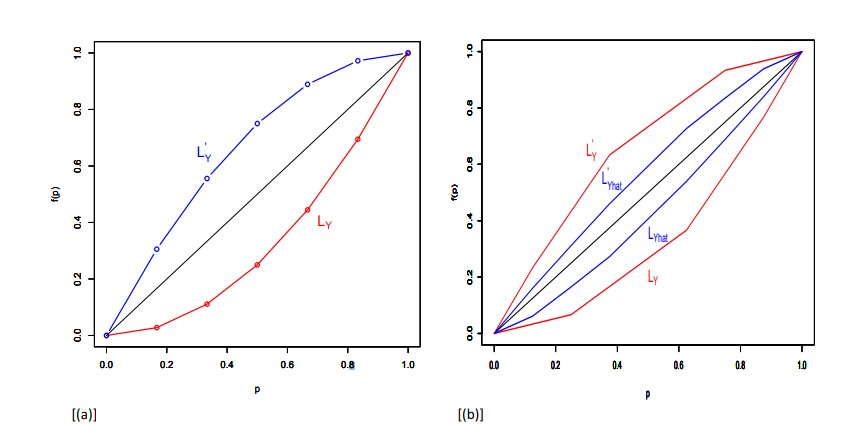

<div style="text-align: center;">Figure 1: [a] The Lorenz Zonoid; [b] The inclusion property: <math>LZ(\hat{Y}) ⊂ LZ(Y)</math></div> | |||

within a nation or a social group<ref>Gini, C.: On the measure of concentration with special reference to income and statistics. General | |||

Series 208, pp. 73-79. Colorado College Publication (1936)</ref><ref name="ref_7">Lorenz, M.O.: [https://doi.org/10.2307/2276207 Methods of measuring the concentration of wealth]. ''Publications of the American Statistical Association'' 70, 209-219 (1905) doi:10.2307/2276207</ref>. Both the Gini coefficient and the Lorenz Zonoid measure statistical dispersion in terms of the mutual variability among the observations, a metric that is more robust to extreme data than the standard variability from the mean. | |||

Given a variable <math>Y</math> and <math>n</math> observations, the Lorenz Zonoid can be defined from the Lorenz curve (<math>L_Y</math>) and the dual Lorenz curve (<math>L'_Y</math>)<ref name="ref_7"/>, whose graphical representations are provided in Fig. 1 [a].

The Lorenz curve of a variable <math>Y</math> (<math>L_Y</math>), obtained by re-ordering the <math>Y</math> values in non-decreasing order, has points whose coordinates can be specified as <math>\left(i/n, \sum_{j=1}^{i} y_{r_j}/(n\bar{y})\right)</math>, for <math>i = 1, \ldots, n</math>, where <math>r</math> and <math>\bar{y}</math> indicate the (non-decreasing) ranks of <math>Y</math> and the mean value of <math>Y</math>, respectively. Similarly, the dual Lorenz curve of <math>Y</math> (<math>L'_Y</math>), obtained by re-ordering the <math>Y</math> values in non-increasing order, has points with coordinates <math>\left(i/n, \sum_{j=1}^{i} y_{d_j}/(n\bar{y})\right)</math>, for <math>i = 1, \ldots, n</math>, where <math>d</math> indicates the (non-increasing) ranks of <math>Y</math>. The area lying between the <math>L_Y</math> and <math>L'_Y</math> curves corresponds to the Lorenz Zonoid, which coincides with the Gini coefficient in the one-dimensional case.
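As a numerical illustration of the two curves, the following Python sketch (NumPy only; the function names are ours, not the authors') builds the Lorenz and dual Lorenz curves from the coordinates above, prepending the origin (0, 0) to both, and approximates the area between them with the trapezoid rule. It assumes a variable with a positive mean, since the ordinates are normalised by <math>n\bar{y}</math>.

```python
import numpy as np


def lorenz_curves(y):
    """Return (x, L_Y, L'_Y), with the origin (0, 0) prepended to both curves."""
    y = np.asarray(y, dtype=float)
    n = y.size
    total = n * y.mean()                       # normalising constant n * y-bar
    ascending = np.sort(y)                     # non-decreasing order -> Lorenz curve
    descending = ascending[::-1]               # non-increasing order -> dual Lorenz curve
    x = np.arange(n + 1) / n                   # abscissae 0, 1/n, ..., 1
    lorenz = np.concatenate(([0.0], np.cumsum(ascending) / total))
    dual = np.concatenate(([0.0], np.cumsum(descending) / total))
    return x, lorenz, dual


def lens_area(y):
    """Area between the dual Lorenz curve and the Lorenz curve (trapezoid rule)."""
    x, lorenz, dual = lorenz_curves(y)
    gap = dual - lorenz                        # vertical distance between the two curves
    return float(np.sum((gap[1:] + gap[:-1]) / 2.0 * np.diff(x)))


print(lens_area([1, 2, 3, 4]))
```

For <math>Y = (1, 2, 3, 4)</math> the printed area is 0.25 up to floating-point rounding, which is the Gini coefficient of these four values. Because both curves are piecewise linear between the listed points, the trapezoid rule is exact here rather than an approximation.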

From a practical viewpoint, given <math>n</math> observations, the Lorenz Zonoid of a generic variable <math>\cdot</math> is computed through the covariance operator as

{| style="width: 100%;"

| style="width: 90%; text-align: center;"| <math>LZ(\cdot) = \frac{2\,\mathrm{Cov}(\cdot, r(\cdot))}{n\,E(\cdot)}</math>

| style="width: 10%; text-align: right;"| (1)

|}

where <math>r(\cdot)</math> and <math>E(\cdot)</math> are the corresponding rank score and mean value, respectively.
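Eq. (1) translates directly into code. The sketch below is ours and assumes the population covariance (division by <math>n</math>) and ranks <math>1, \ldots, n</math> in non-decreasing order; with this convention the result equals the classic Gini coefficient, which the second function computes independently from the mean absolute difference between all pairs of observations. Tied values may receive arbitrary distinct ranks without changing the result, because tied observations share the same value.

```python
import numpy as np


def lorenz_zonoid(v):
    """Eq. (1): LZ(v) = 2 Cov(v, r(v)) / (n E(v)), using the population covariance."""
    v = np.asarray(v, dtype=float)
    n = v.size
    ranks = np.argsort(np.argsort(v)) + 1.0                  # ranks 1..n, non-decreasing order
    cov = np.mean((v - v.mean()) * (ranks - ranks.mean()))   # divides by n, not n - 1
    return float(2.0 * cov / (n * v.mean()))


def gini_mean_difference(v):
    """Independent check: sum_i sum_j |v_i - v_j| / (2 n^2 E(v))."""
    v = np.asarray(v, dtype=float)
    n = v.size
    return float(np.abs(v[:, None] - v[None, :]).sum() / (2.0 * n * n * v.mean()))


sample = [1, 2, 3, 4]
print(lorenz_zonoid(sample), gini_mean_difference(sample))   # 0.25 0.25
```

The covariance form needs only a sort, so it scales as <math>O(n \log n)</math>, whereas the pairwise check is <math>O(n^2)</math> and is meant only for verification on small samples.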

The Lorenz Zonoid fulfills some attractive properties. An important one is the “inclusion” of the Lorenz Zonoid of any set of predicted values <math>\hat{Y}</math>, <math>LZ(\hat{Y})</math>, in the Lorenz Zonoid of the observed response variable <math>Y</math>, <math>LZ(Y)</math>. The inclusion property, illustrated in Fig. 1 [b], allows one to interpret the ratio between the Lorenz Zonoid of the predictions <math>\hat{Y}</math> obtained from a particular predictor set and the Lorenz Zonoid of <math>Y</math> as the share of the mutual variability of the response “explained” by the predictor variables that give rise to <math>\hat{Y}</math>, similarly to the well-known variance decomposition that gives rise to the <math>R^2</math> measure.

In this paper, we leverage the inclusion property to derive a machine learning feature selection method that maintains high predictive accuracy while increasing explainability via parsimony, and that can also improve both sustainability and fairness. More precisely, we present novel scores for assessing both explainability and accuracy.

Given <math>K</math> predictors, a score for evaluating explainability can be defined as:

{| style="width: 100%;"

| style="width: 90%; text-align: center;"| <math>\text{Ex-Score} = \frac{\sum_{k=1}^{K} SL_k}{LZ(Y)}</math>

| style="width: 10%; text-align: right;"| (2)

|}

where <math>LZ(Y)</math> is the Lorenz Zonoid value of the response variable <math>Y</math>, and <math>SL_k</math> denotes the Shapley-Lorenz value associated with the <math>k</math>-th predictor. As illustrated in<ref name="ref_5">Giudici, P., Raffinetti, E.: [https://doi.org/10.1016/j.eswa.2020.114104 Shapley-Lorenz eXplainable Artificial Intelligence]. ''Expert Syst. Appl.'' 167, 1–9 (2021). doi: 10.1016/j.eswa.2020.114104</ref>, the Shapley-Lorenz contribution associated with the additional included variable <math>X_k</math>, denoted <math>LZ^{X_k}(\hat{Y})</math> (that is, <math>SL_k</math> in Eq. (2)), equals:

{| style="width: 100%;"

| style="width: 90%; text-align: center;"| <math>LZ^{X_k}(\hat{Y}) = \sum_{X' \subseteq C(X) \setminus X_k} \frac{|X'|!\,(K - |X'| - 1)!}{K!} \left[ LZ(\hat{Y}_{X' \cup X_k}) - LZ(\hat{Y}_{X'}) \right]</math>

| style="width: 10%; text-align: right;"| (3)

|}

where <math>LZ(\hat{Y}_{X' \cup X_k})</math> and <math>LZ(\hat{Y}_{X'})</math> describe the (mutual) variability of the response variable <math>Y</math> explained by the models that include, respectively, the <math>X' \cup X_k</math> predictors and only the <math>X'</math> predictors.
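The sketch below computes Eq. (3) by exhaustive enumeration of the subsets <math>X'</math>, and then the Ex-Score of Eq. (2). Several choices are our assumptions rather than the authors' setup: an ordinary least squares fit with intercept (via NumPy) stands in for the neural network used in the paper, the empty model is taken to predict a constant (whose Lorenz Zonoid is zero), predictions are computed in-sample, and the data are synthetic. The response is given a clearly positive mean because Eq. (1) divides by it. Exhaustive enumeration requires <math>2^K</math> model fits, which is affordable only for a handful of predictors.

```python
import math
from itertools import combinations

import numpy as np


def lorenz_zonoid(v):
    """Eq. (1) with the population covariance and ranks 1..n."""
    v = np.asarray(v, dtype=float)
    ranks = np.argsort(np.argsort(v)) + 1.0
    cov = np.mean((v - v.mean()) * (ranks - ranks.mean()))
    return float(2.0 * cov / (v.size * v.mean()))


def fitted_values(X, y, cols):
    """OLS fitted values from an intercept plus the columns in `cols` (stand-in model)."""
    design = np.column_stack([np.ones(len(y))] + [X[:, c] for c in cols])
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    return design @ beta


def shapley_lorenz(X, y):
    """Eq. (3) for every predictor, enumerating all subsets X' of the other predictors."""
    K = X.shape[1]
    cache = {}

    def lz_model(cols):
        key = tuple(sorted(cols))
        if key not in cache:
            # Assumption: the empty model predicts a constant, whose Lorenz Zonoid is zero.
            cache[key] = lorenz_zonoid(fitted_values(X, y, key)) if key else 0.0
        return cache[key]

    values = np.zeros(K)
    for k in range(K):
        others = [j for j in range(K) if j != k]
        for size in range(K):
            weight = math.factorial(size) * math.factorial(K - size - 1) / math.factorial(K)
            for subset in combinations(others, size):
                values[k] += weight * (lz_model(subset + (k,)) - lz_model(subset))
    return values


# Synthetic example: X0 matters most, X1 less, X2 not at all.
rng = np.random.default_rng(0)
X = rng.normal(size=(500, 3))
y = 10.0 + 2.0 * X[:, 0] + 1.0 * X[:, 1] + rng.normal(size=500)

sl = shapley_lorenz(X, y)
ex_score = sl.sum() / lorenz_zonoid(y)      # Eq. (2)
print(sl, ex_score)
```

Because Shapley values are efficient, the <math>SL_k</math> computed this way sum to the Lorenz Zonoid of the full model's predictions, so in this sketch the Ex-Score reduces to <math>LZ(\hat{Y}_{X_1, \ldots, X_K})/LZ(Y)</math>.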

Similarly, following a cross-validation procedure that splits the whole dataset into a training and a test set, the accuracy of the predictions generated by an ML model can be derived as:

{| style="width: 100%;"

| style="width: 90%; text-align: center;"| <math>\text{Ac-Score} = \frac{LZ(\hat{Y}_{X_1, \ldots, X_k})}{LZ(Y_{\text{test}})}</math>

| style="width: 10%; text-align: right;"| (4)

|}

where <math>LZ(\hat{Y}_{X_1, \ldots, X_k})</math> is the Lorenz Zonoid of the predicted response variable, obtained using <math>k</math> predictors on the test set, and <math>LZ(Y_{\text{test}})</math> is the Lorenz Zonoid value of the response variable <math>Y</math> computed on the same test set.
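A minimal sketch of Eq. (4) in Python, under stated assumptions: an ordinary least squares fit with intercept stands in for the neural network used in the paper, the data are synthetic, and the helper name <code>ac_score</code> is hypothetical. Since the bitcoin data are a daily time series, we assume a chronological split, with the first 70% of the observations used for training and the remainder for testing; the split ratio is our choice. As in Eq. (4), the numerator is the Lorenz Zonoid of the test-set predictions themselves.

```python
import numpy as np


def lorenz_zonoid(v):
    """Eq. (1) with the population covariance and ranks 1..n."""
    v = np.asarray(v, dtype=float)
    ranks = np.argsort(np.argsort(v)) + 1.0
    cov = np.mean((v - v.mean()) * (ranks - ranks.mean()))
    return float(2.0 * cov / (v.size * v.mean()))


def ac_score(X, y, cols, train_fraction=0.7):
    """Eq. (4): LZ of the test-set predictions over LZ of the test-set response."""
    n_train = int(train_fraction * len(y))          # chronological split: no shuffling
    train, test = slice(0, n_train), slice(n_train, len(y))

    def design(rows):
        block = X[rows]
        return np.column_stack([np.ones(block.shape[0])] + [block[:, c] for c in cols])

    # Hypothetical stand-in model: OLS fitted on the training rows only.
    beta, *_ = np.linalg.lstsq(design(train), y[train], rcond=None)
    y_hat_test = design(test) @ beta
    return lorenz_zonoid(y_hat_test) / lorenz_zonoid(y[test])


rng = np.random.default_rng(1)
X = rng.normal(size=(1000, 3))
y = 10.0 + 2.0 * X[:, 0] + 1.0 * X[:, 1] + rng.normal(size=1000)

print(ac_score(X, y, cols=[0, 1, 2]))   # informative predictors included
print(ac_score(X, y, cols=[2]))         # pure-noise predictor only
```

A model built on the informative predictors reproduces most of the test-set mutual variability, while the noise-only model yields nearly constant predictions and therefore an Ac-Score close to zero.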

By exploiting the Shapley-Lorenz values and the set of predictors that ensures a suitable degree of predictive accuracy, appropriate scores for measuring both fairness and sustainability can also be formalised.

== Data ==

The data considered here are described in<ref name="ref_4">Giudici, P., Abu-Hashish, I.: [https://doi.org/10.1016/j.frl.2018.05.013 What determines bitcoin exchange prices? A network VAR approach]. ''Financ. Res. Lett.'' 28, 309–318 (2019). doi: 10.1016/j.frl.2018.05.013</ref> and are aimed at understanding whether and how bitcoin price returns vary as a function of a set of classical financial explanatory variables.

A further investigation of the data was carried out by Giudici and Raffinetti<ref name="ref_5"/>, who introduced a normalised Shapley measure for assessing the contribution of each additional predictor in terms of Lorenz Zonoids.

The data include the time series of daily bitcoin price returns on the Coinbase exchange, as the target variable to be predicted, and the time series of Oil, Gold and SP500 price returns, along with those of the USD/Yuan and USD/Eur exchange rates, as candidate explanatory variables.

The aim of the data analysis is to employ the proposed S.A.F.E. metrics derived from the Lorenz Zonoid as criteria for measuring the SAFEty of a collection of machine learning models based on neural networks.

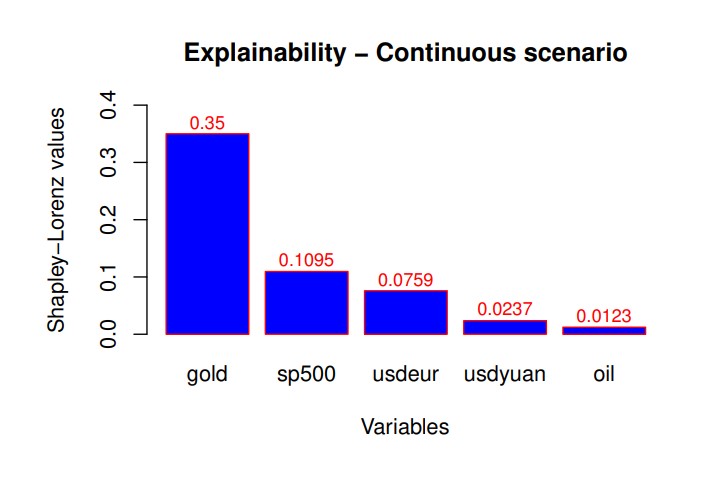

For lack of space, we present only the results that concern explainability (Fig. 2) and accuracy (Fig. 3). Both are calculated on the predictions obtained by applying a neural network model to the data.

Fig. 2 shows the Shapley-Lorenz measure of explainability<ref name="ref_5"/>, a normalised extension of the classic Shapley values, for all the considered explanatory variables of the daily bitcoin price returns. Fig. 2 clearly highlights that Gold price returns are the most important variable in explaining bitcoin price return variations, followed by the others.

[[File:Sustainable Accurate Fair Explainable Machine Learning Models Giudici Raffinetti Fig2.jpg|centre]]

<div style="text-align: center;">Figure 2: Explainability of the considered explanatory variables, in terms of the Shapley-Lorenz measure, for a continuous response.</div>

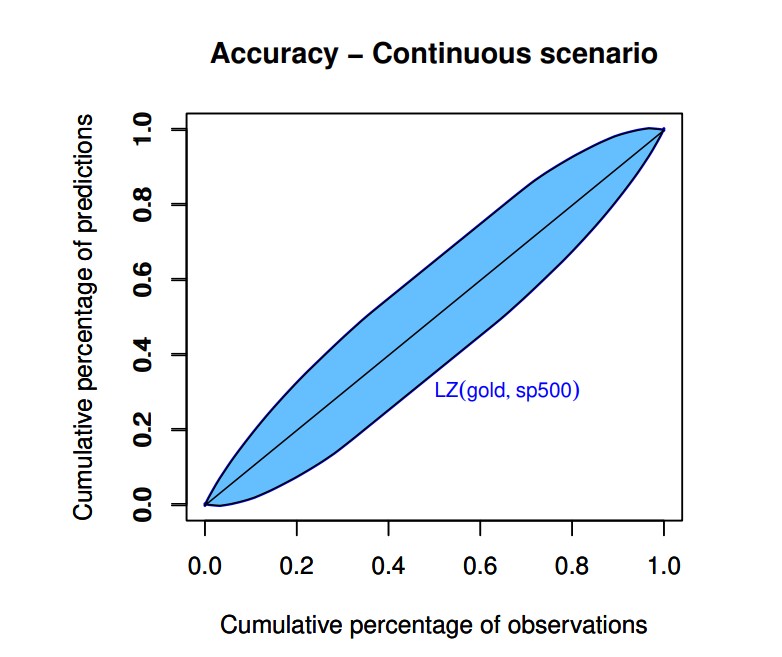

Fig. 3 shows the Lorenz Zonoid of the machine learning model selected by our proposed feature selection procedure, which is based on the comparison between Lorenz Zonoids.

[[File:Sustainable Accurate Fair Explainable Machine Learning Models Giudici Raffinetti Fig3.jpg|centre]]

<div style="text-align: center;">Figure 3: Accuracy of the selected model, in terms of its Lorenz Zonoid, for a continuous response.</div>

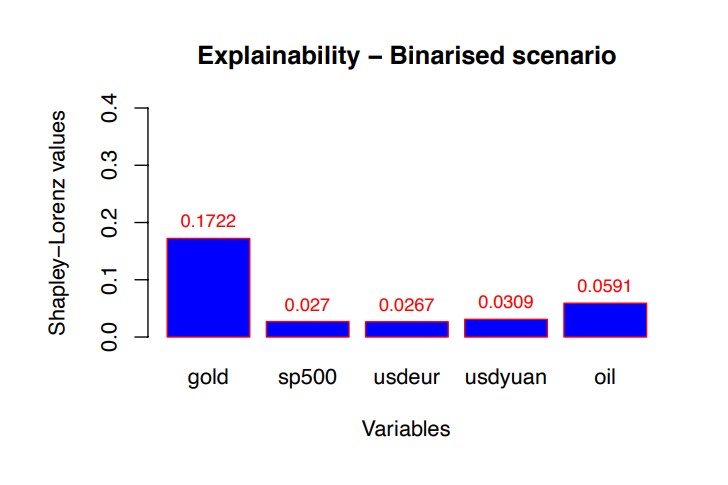

Note that the model selected in Fig. 3 contains Gold and SP500 as the relevant predictors. For robustness, we have repeated the analysis after binarising the response variable around zero. An advantage of our proposed methodology is that no change of metrics is required to repeat the assessment of trustworthy AI, even though the nature of the response variable has changed. For lack of space, we present only the results in terms of explainability, in Fig. 4.
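To illustrate why the same metric applies unchanged, the sketch below binarises a short vector of hypothetical returns (invented for illustration, not the bitcoin data) around zero and passes the result to the same implementation of Eq. (1), written here with the population covariance and ranks <math>1, \ldots, n</math> as our assumed convention. For a 0/1 variable with a share <math>p</math> of ones, the Lorenz Zonoid reduces to <math>1 - p</math>.

```python
import numpy as np


def lorenz_zonoid(v):
    """Eq. (1) with the population covariance and ranks 1..n."""
    v = np.asarray(v, dtype=float)
    ranks = np.argsort(np.argsort(v)) + 1.0
    cov = np.mean((v - v.mean()) * (ranks - ranks.mean()))
    return float(2.0 * cov / (v.size * v.mean()))


# Hypothetical daily returns, for illustration only.
returns = np.array([0.012, -0.004, 0.031, -0.020, -0.007, -0.001, 0.015, -0.009])
up = (returns > 0).astype(float)          # 1 if the return is positive, 0 otherwise

p = up.mean()                             # share of positive returns: 3 / 8
print(p, lorenz_zonoid(up))               # 0.375 0.625
```

The binary response needs no special treatment: the covariance formula sees a variable with a positive mean and returns a value in [0, 1), just as for a positive continuous response.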

[[File:Sustainable Accurate Fair Explainable Machine Learning Models Giudici Raffinetti Fig4.jpg|centre]]

<div style="text-align: center;">Figure 4: Explainability of the considered explanatory variables, in terms of the Shapley-Lorenz measure, for a binary response.</div>

Fig. 4 confirms Gold price returns as the most important variable, but the second most important variable is now Oil price returns, rather than SP500.

== Conclusions ==

In this paper we have proposed a set of statistical measures that can provide an overall assessment of the trustworthiness of AI applications. The application of the proposed scores to a neural network model, used to predict bitcoin price returns in terms of a set of classical financial variables, shows the practical utility of our approach.

== References ==

<references/> | |||

